Ai-Da, the world’s first ultrarealistic robot artist, getting ready to address the House of Lords about art, technology and machine learning (Rob Pinney/Getty Images)

The Post-Human Economy

by Katherine Dee | Jan 10, 2023

We’re supposedly on the brink of an artificial intelligence breakthrough. The bots are already communicating—at least they’re stringing together words and creating images. Some of those images are even kind of cool, especially if you’re into that sophomore dorm room surrealist aesthetic. GPT-3 and, more recently, ChatGPT, two tools from OpenAI (which recently received a $29 billion valuation), are taking over the world.

Each new piece about GPT-3 tells a different story of displacement: Gone are the halcyon days of students writing original essays, journalists doing original reporting, or advertisers creating original ad copy. What was once the domain of humans will now be relegated to bots. The next technological revolution is upon us, and it’s coming for creative labor.

Timothy Shoup from the Copenhagen Institute for Future Studies seems confident that with ChatGPT and GPT-3, the problem is about to get much worse, and that 99.9% of internet content will be AI-generated by 2030. The future, in other words, will be by bots and for bots.

Estimates like this scare people because they’re couched in the language of science fiction: a dead internet that’s just AI-generated content and no people. But isn’t that a lot of what the internet is already? It’s not as though we don’t know this, either—we are all aware not only of bots but of the sea of bot-generated content that exists on every social media platform and in every Google search.

Take me, for example. I can’t stop watching content farm-generated videos on TikTok. They’re simple: just an automated voice reading a Reddit post, usually overlaid on a clip from Minecraft or a mobile game. An increasing number of TikToks are like this: not viral dances, recipes, or pranks, but a generic content product engineered to command the maximum amount of human attention at the minimum possible cost to create.

They come in a few different templates. The Reddit posts, already mentioned; the uncanny valley of recipes that are just a hair off, like these odd, almost absurd cooking videos; voiceovers of news that could be true, or may very well not be. And then there are the “very satisfying” videos of people doing things like squeezing clay or letting slime drip between their fingers; random clips of Family Guy with a stock video of a person “reacting” to it. It’s a genre unto itself—there are even subgenres.

This mass-produced shlock, the “pink slime” of internet content, is, as Vice reports about the TikToks, created as a low-effort way to generate ad revenue. You pull a couple of levers, turn a couple of dials, figure out what viewers like, what the algorithms like, and fire. It’s tough to pinpoint exactly how much of this content is out there. What we know is nebulous: A lot of online content is fake—that is, nonhuman. What exactly “a lot” means, of course, varies from source to source.

What’s trickier is the stuff that isn’t immediately ignored. The TikTok videos, for instance. I like them, and I imagine others like them because they’re easy to watch—they’re entertaining in the way watching a spinning top or a ball bounce is entertaining. You can do it for hours, and you’re not totally sure why. They’ve even adapted to the modern tendency to divide your attention: Oftentimes a split screen will feature a play-through of something like Minecraft or a mobile game set alongside a video of someone, say, squishing clay in their hands.

This isn’t a phenomenon new to TikTok, either.

One might recall the head-scratching YouTube videos geared toward children, which, in 2017, made headlines after reporter James Bridle pointed out how disturbing so many of them were, often featuring characters from kids’ TV programs but with the bots adding “keyword salad,” “violence,” and “the very stuff of kids’ worst dreams”—for example, Peppa Pig getting tortured at the dentist.

Then, of course, there’s what you might call the “Wish” phenomenon: T-shirts, posters, wall decals, you name it, that serve a similar purpose. Again, mass-produced, bot-produced, not always sensical, and somehow, despite this, a great way to make a few extra bucks. And that’s just the interesting stuff—the stuff we might consume voluntarily, even if we know it’s garbage.

There’s SEO-generated content (once used to game Google searches), repurposed and repeated content, and bot-generated responses on social media, like Reddit, Quora, and of course, Facebook and Twitter. It’s unclear how much of it is even viewed by actual people and how much of it is “bot-to-bot” traffic (for bots, by bots, all to generate clicks), but we know it’s everywhere.

We are also getting better at producing it. A marketing manager from South Florida, E., shared that her firm relies on that annoying SEO debris, this “digital trash” that already litters search results. E. asked to remain anonymous for fear of her comments putting her job in jeopardy. E. oversees the creation of keyword-laden landing pages that bait users who are trying to Google something.

She used to spend a lot of time clicking around websites like Upwork, looking for reliable contractors who could quickly generate copy for her. These days, though, she’s taken on much of the workload herself: She plugs something into ChatGPT, does some minor editing, and up it goes. I asked E. if she thought that these contractor copywriting jobs would disappear, especially as the models get better.

To her, a lot of the industry is “fat.” That is, there’s a lot of completely unnecessary work that she doesn’t imagine disappearing overnight. While the contractor copy wasn’t always reliable, the AI wasn’t always either. If anything, she said, marketing firms like hers will eventually employ people to design prompts and edit AI-generated writing.

Online content isn’t the only or the most significant field that ChatGPT and GPT-3 will transform. Fields like law, customer service, content moderation, and software engineering will also undergo massive changes.

In the case of law, a field notorious for its grunt work, the responsibilities of a first-year law school grad may drastically change. AI might be able to scan large documents for certain clauses or loopholes; complete directives like “add an NDA clause”; or edit documents with an explanation of the changes. These are tasks that many people pay $500 per hour for, and they will suddenly be instantaneous and free.

Not all of this is hypothetical, either. Detangle AI offers summaries of legal docs designed to be “actually understood.” Another company, Spellbook, uses GPT-3 to review and suggest language for contracts, touting itself as a “Copilot for lawyers,” riffing off GitHub’s Copilot, which is something like a superintelligent autocomplete for engineers, a tool that’s completely transformed software documentation.

While this isn’t as sensational as AI defending someone in court or acting as a lobbyist (two stories that have recently made headlines), these are the types of changes that are less likely to get quashed by regulations or even just social norms … and more likely to happen “seemingly overnight.”

Customer service is one of the more interesting examples. We’ve all interacted with customer service chatbots—they’ve been commonplace since around 2017. They’ve also been improving each year, evolving from a limited (and frustrating) menu of options spit out at you from a pop-up into a service that, in some cases, is more pleasant to interact with than an actual customer service representative. According to Forbes, the AI-customer service niche is flourishing, with an explosion of startups such as Zoho, Levity AI, and Ada promising to make customer service even more frictionless.

We’ve long lived in a world with this type of automation, though—press 1 for this, press 2 for that—that’s nothing new. What happens, though, when AI for customer service isn’t just on the service end? For example, consumer-facing customer service apps like DoNotPay use a large language model (LLM) to interact with customer service on behalf of their users. How many customer service interactions will include no human voice? What are the knock-on effects of that?

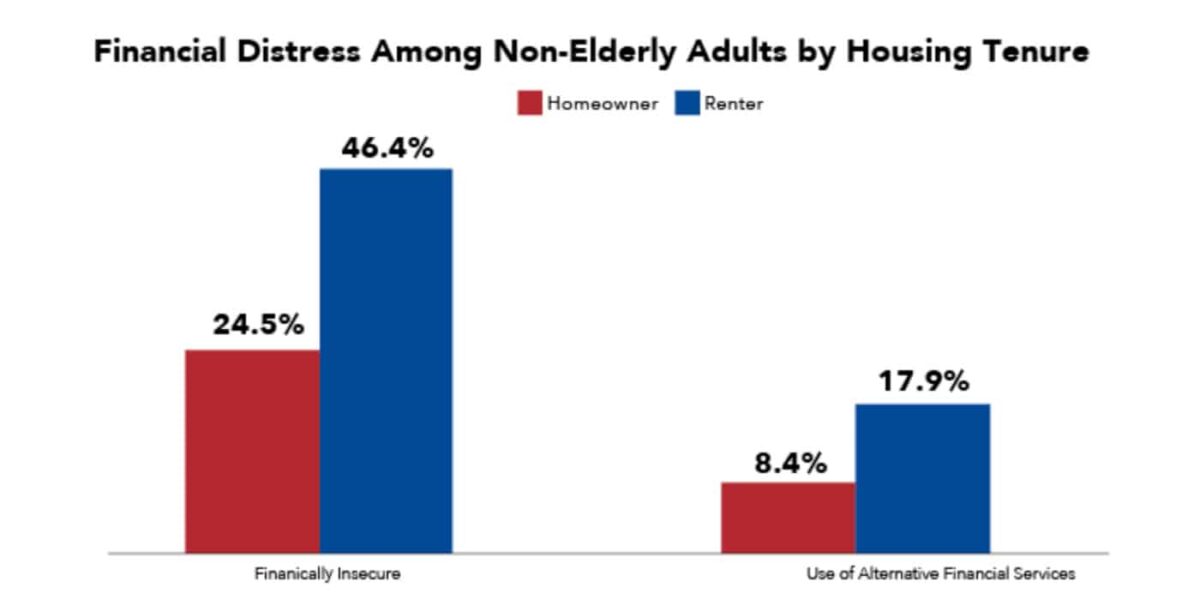

Security is now a growing concern. Anton Troynikov, a robotics engineer and researcher, points out that AI has a tendency to “hallucinate.” That is, it’s not always right—it’s not designed to be correct; it’s designed to be similar. Think sophisticated digital parrot, as opposed to a free-thinking digital assistant. If you’re dealing with something like a legal contract or your health insurance, you may not want to trust an AI to get all of the details right. But once the automation of these services becomes the industry standard (the way it already is when you call your cable or utility company), most people won’t have a choice. Contacting another person will become a luxury product, available only to the wealthy who can afford to pay the “conscious human being premium.”

People like Ryan McKinney, who founded Symbiose, a company that has been working on integrating AI and communication, argue that we should be optimistic. These are tools that increase not only our productivity but our ability to navigate a world that’s already changed and alienating. On our call, he offered the example of AI summarizing the contents of messages—a helpful tool, especially if you receive high volumes of communication. Once AI does that, what else can it do?

For Ryan, “AI can help us be more human.” Ryan thinks that AI supervision will explode—creating new jobs for people that will require more nuance, diligence, and domain expertise. “It’s a tool for leverage, not replacement,” he says. AI can take over our more monotonous tasks, he believes, freeing us to do more meaningful work.

The argument echoes some of the more upbeat predictions from the late 1990s when proponents of globalization insisted that the loss of American manufacturing jobs would be offset as former factory workers were trained to take on new roles in the knowledge economy. Of course, it didn’t work out that way. But with the current pace of automation, jobs aren’t being sent overseas; they’re being “offshored” to the mass server farms powering the algorithms. And while it’s certainly possible that the first generation of AI will create new jobs for human supervisors, those jobs would also be vulnerable to automation as the AIs improve.

Jon Stokes, a writer and the co-founder of the tech publication Ars Technica, argues that the work we eliminate for “productivity’s sake” serves an important purpose. Grunt work builds skill, and as corny as it might sound, it also builds character. Stokes anticipates sweeping generational gaps as the perceived necessity for this work disappears. It’s a point that’s easy to overlook in a world where work has been weaponized and where “intern-level” tasks like taking notes on calls or writing dry copy have been monetized beyond our wildest dreams and farmed out to low-skill workers.

Another person I reached out to, who founded a massively successful mobile gaming company during the original app bubble, was also skeptical about the AI breakthrough. His concerns were less social than Jon’s—his thinking was cynical, albeit intuitive. A superintelligent autocomplete or even spell check is all well and good, and he understood the tendency for people to be utopian about it—but how can we be so sure it won’t bias the output? What if our content, code, or communications were deemed “problematic”? Wouldn’t it stand to reason that AI would prevent us from communicating certain things? One can take a gander at Dissident Right Twitter trolls to see what ChatGPT won’t talk about.

Less divisively, in my own experience, I’ve hit a wall asking ChatGPT about the occult and how to perform magic. The response I received was that these things are “outside of mainstream beliefs,” and “might be offensive to some people,” so the program couldn’t move forward. A friend, however—the writer Sam Buntz—had better luck. Maybe the problem was with how I phrased my prompt and not the question itself? Still a frustrating learning curve.

When I asked Nick Cammarata from OpenAI what he thought about this, he said, “Well, have you tried asking the model?”

Funny enough, I hadn’t. All I’d done was ask other people.
