If AI Thinks George Washington is a Black Woman, Why Are We Letting It Pick Bomb Targets?

by Matt Taibbi | Feb 29, 2024

After yesterday’s Racket story about misadventures with Google’s creepy new AI product, Gemini, I got a note about a Bloomberg story from earlier this week. From “US Used AI to Help Find Middle East Targets for Airstrikes”:

The US used artificial intelligence to identify targets hit by air strikes in the Middle East this month, a defense official said, revealing growing military use of the technology for combat… Machine learning algorithms that can teach themselves to identify objects helped to narrow down targets for more than 85 US air strikes on Feb. 2…

The U.S. formally admitting to using AI to target human beings was a first of sorts, but Google’s decision to release a moronic image generator that mass-produces black popes and Chinese founding fathers was the story that garnered the ink and outrage. The irony is that the military tale is equally frightening, and related in unsettling ways:

Bloomberg quoted Schuyler Moore, Chief Technology Officer for U.S. Central Command. She described using AI to identify bombing targets in Iraq and Syria, in apparent retaliation for a January 28th attack in Jordan that killed three U.S. troops and injured 34. According to Moore, it was last year’s Hamas attack that sent the Pentagon over the edge into a willingness to deploy Project Maven, in which AI helps the military identify targets using data from satellites, drones, and other sources.

“October 7th, everything changed,” she said. “We immediately shifted into high gear and a much higher operational tempo than we had previously.”

The idea that the U.S. was so emotionally overcome on October 7th that it had to activate Project Maven seems bizarre at best. The Pentagon has boasted for years about deploying AI, from sending Switchblade drones to Ukraine that are “capable of identifying targets using algorithms,” to the “Replicator” initiative launched with a goal of hitting “1000 targets in 24 hours,” to talk of deploying a “Vast AI Fleet” to counter alleged Chinese AI capability. Nonetheless, it’s rare for someone like Moore to come out and announce that a series of recent air strikes were picked at least in part by algorithms that “teach themselves to identify” objects.

Project Maven made headlines in 2018 when, in a rare (but temporary) attack of conscience, Google executives announced they would not renew the firm’s first major Pentagon contract. Some 4,000 employees had signed a group letter that seems quaint now, claiming that building technology to assist the U.S. government in “military surveillance” was “not acceptable.” Employees implored CEO Sundar Pichai to see that “Google’s unique history, its motto Don’t Be Evil, and its direct reach into the lives of billions of users set it apart.”

But the firm’s kvetching about Don’t Be Evil and squeamishness about cooperating with the Pentagon didn’t last long. It soon began bidding for more DoD work and won a contract to provide cloud security for the Defense Innovation Unit and a piece of a multibillion-dollar CIA cloud contract, among other things. Former Google CEO and Alphabet executive chairman Eric Schmidt chaired the Defense Innovation Board from 2016 to 2020, and six years after the employee letter denouncing military surveillance and targeting work as “not acceptable,” Google, through efforts like Project Nimbus, is essentially helping military forces like Israel’s IDF develop their own AI programs, as one former exec puts it.

The military dresses up its justifications for programs like Maven in many ways, but if you read between the lines of its own reports, the Pentagon is essentially chasing its own data tail. The sheer quantity of data the armed forces began generating after 9/11, through raids of homes (in Iraq, by the hundreds) and programs like full-motion video (FMV) from drones, overwhelmed human analysis. As General Richard Clarke and Fletcher professor Richard Shultz put it in an essay about Project Maven for West Point’s Modern War Institute:

[Drones] “sent back over 327,000 hours (or 37 years) of FMV footage.” By 2017, it was estimated for that year that the video US Central Command collected could amount to “325,000 feature films [approximately 700,000 hours or eighty years].”

The authors added that the “intelligence simply became snowed under by data,” which to them meant “too much real-time intelligence was not being exploited.” This leads to a second point: as Google’s former CEO Schmidt put it in 2020, when commenting on the firm’s by-then-fully-revived partnership with the Pentagon, “The way to understand the military is that soldiers spend a great deal of time looking at screens.”

Believing this was not an optimal use of soldier time, executives like Schmidt and military brass began pushing for more automated analysis. Even though early AI programs were “rudimentary with many false detections,” with accuracy “only around 50 percent” and even “the difference between men, women, and children” proving challenging, they plowed ahead.

Without disclosing how much accuracy has improved since Maven’s early days, Shultz and Clarke explained that the military is now planning a “range of AI/ML applications” to drive “increased efficiency, cost savings, and lethality,” and a larger goal:

To prepare DoD as an institution for future wars—a transformation from a hardware-centric organization to one in which AI and ML software provides timely, relevant mission-oriented data to enable intelligence-driven decisions at speed and scale.

This plan, like so many other things that emerge from Pentagon bureaucracy, is a massive self-licking ice cream cone.

Defense leaders first push to make and deploy ever-increasing quantities of flying data-gathering machines, which in turn shoot gazillions of feature films’ worth of surveillance footage per year. As the digital haul from the robot fleet grows, military leaders claim they’re forced to finance AI programs that can identify the things worth shooting at in these mountains of footage, to avoid the horror of opportunities “not being exploited.” This has the advantage of being self-fulfilling in its logic: as we shoot at more targets, we create more “exploding insurgencies,” as Clarke and Shultz put it, in turn creating more targets, and on and on.

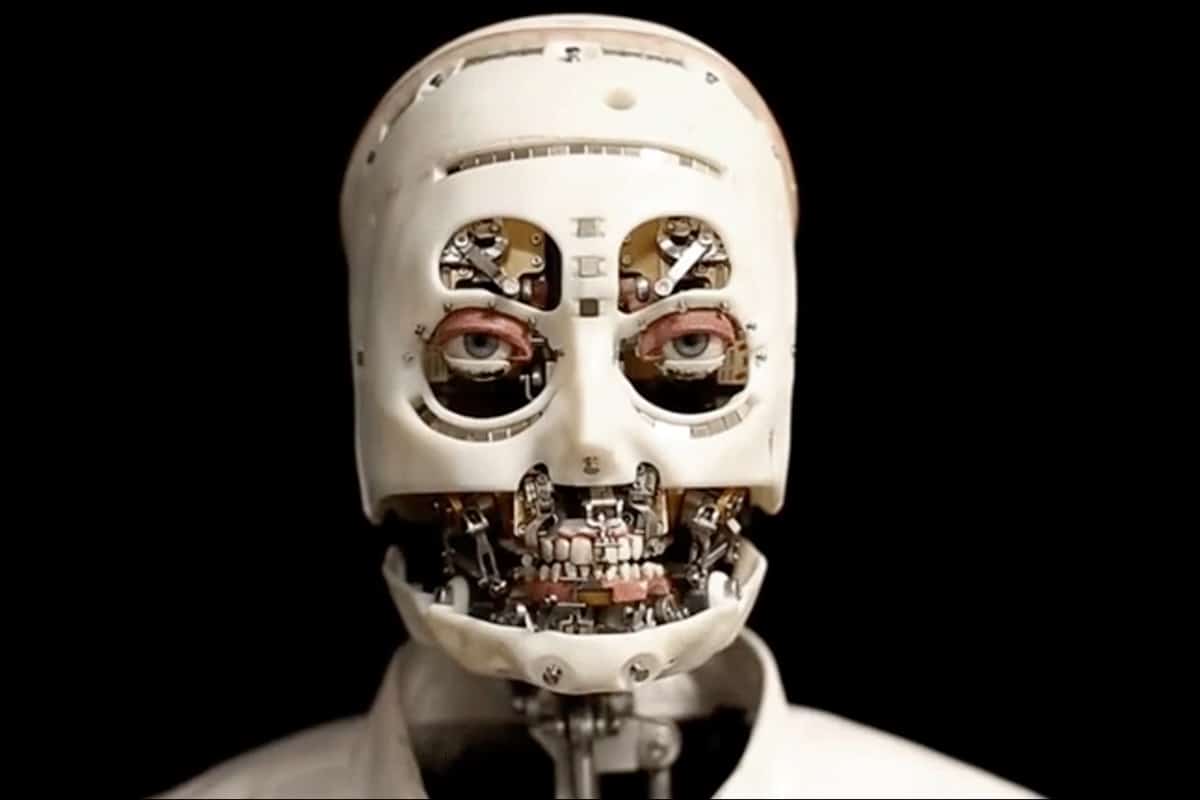

The goal is to transform the military into one endlessly processing aim-and-shoot app. If we’re always scanning for threats and design AI for “timely” responses, war is not only automated and constant, but attacks will tend to increase as budgets increase: more drones = more surveillance = more threats identified = more bombings. We’ll be a set-it-and-forget-it military: an aerial, AI-enhanced version of the infamous self-policing ED-209 robot from RoboCop, complete with “false detections”:

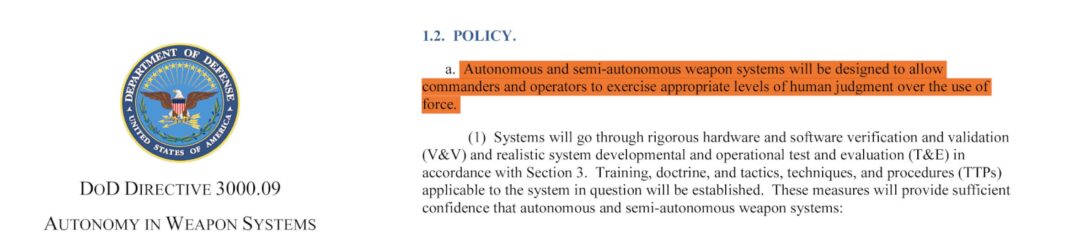

There was a quiet announcement in January 2023 “clarifying” the Pentagon’s “lethal autonomous weapons” policy from 2012. Contrary to popular belief, the U.S. never required that there be a “human in the loop” of AI-enhanced attacks. A new version of DoD Directive 3000.09 was therefore written to “allow commanders and operators to exercise appropriate levels of human judgment” in autonomous attacks.

As seen in the Gemini rollout, and heard repeatedly across years of reports about AI products, the chief issue with such tools is that they make stuff up. The term Silicon Valley prefers is “hallucinate” — journalist Cade Metz deserves kudos for helping make it Dictionary.com’s word of the year in 2023 — but as podcast partner Walter Kirn points out, this language is too kind. The machines lie.

Stories about lawyers caught trying to introduce nonexistent cases they “found” via programs like ChatGPT when preparing briefs are already too numerous to count (one even involved former Donald Trump lawyer Michael Cohen). A Snapchat AI “went rogue,” Air Canada’s chatbot offered a nonexistent discount to a customer, and the media outlet CNET got dinged for errors in AI-written articles. OpenAI is facing a defamation lawsuit after ChatGPT invented a false legal complaint claiming a Georgia radio host embezzled money.

Despite this, companies like Google continue to develop AI-powered programs for newswriting, more appallingly for fact-checking, and, worst of all, for killing human beings. The fact that AI “hallucinates” has not dented the drive to use these tools in fields where exactitude is critical: law and journalism are bad enough, but bomb targeting? The U.S. already has a lengthy record of “erroneous” dronings, including a notorious 2021 case in which a Hellfire missile killed 10 civilians in Kabul. Will removing human input reduce these “false detections”? Probably not, and because they won’t be nearly as meme-able as Gemini, you’ll be less likely to hear of them.

SEPARATED AT BIRTH? Computational “errors,” funny and non-funny editions

Make no mistake, though: images of George Washington as a black woman and the inevitable shots of Middle East hamlets blown up by mistake will stem from the same elite political instinct. This is the real horseshoe theory, a merger of the modern left ideology of force-fed utopianism at home and the old-school neoconservative utopianism of “democracy promotion” by bayonet abroad. There’s a reason these erstwhile political adversaries now serve on the same boards and give to the same politicians. They’re the same over-empowered idiots, from the same schools and neighborhoods, armed with the same lunatic technology, surrendering finally to what they see as their destiny to run things together. What a coming out party they’re having.
